Making a Personal AI Sunglass Shopping Assistant in Python and React

Oct 2, 2024

Recently I was tasked with learning how to work with large language models (LLMs), and I was immediately intrigued by their vision capabilities. I started to think about where this could be applied in a practical context. I had previously made a chatbot product search app for sunglasses, and adding AI vision to make it more interesting seemed like an obvious starting point.

I would have the AI model try to do the following:

- Receive a product image and generate a description, as well as extract keywords that could be used as product tags.

- Receive an image of a user’s face and identify the corresponding face shape.

- Use supplementary information about sunglasses styles and complementary face shapes in order to create accurate product recommendations.

While there are already advanced apps out there for analyzing face shapes and recommending products, I wanted to see what I could learn by making this simple test. Also, I was curious about how accurate a straightforward implementation could be using modern tools like GPT-4o vision. The results, I found, were surprisingly decent.

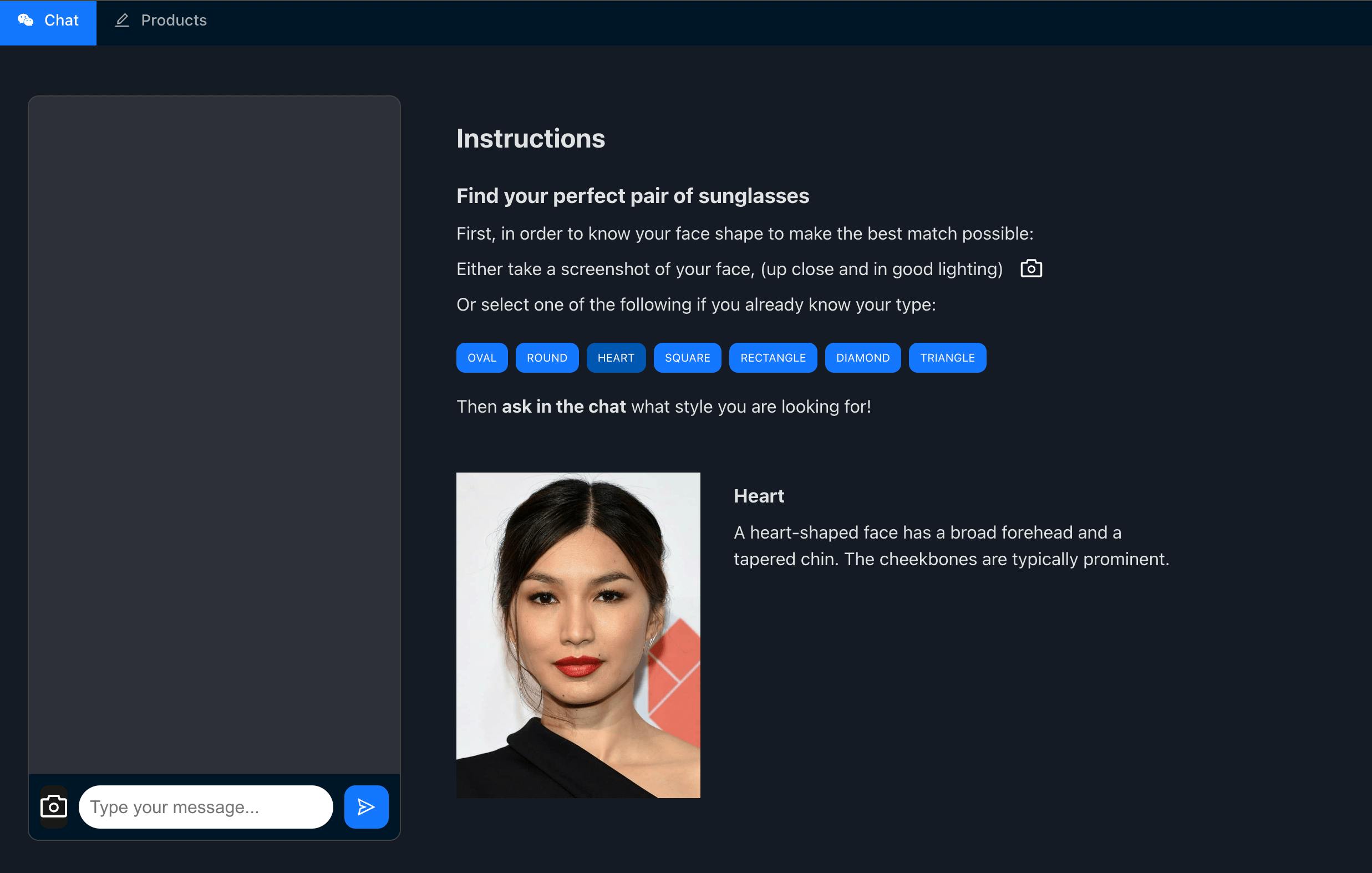

Let’s take a look at the app in more detail. It has two main parts:

- An admin interface for uploading products.

- A shopping assistant that helps users find the best product matches based on their face shape.

For the backend I spun up a Python Flask API connected to Supabase, and for the frontend I used React with Vite.

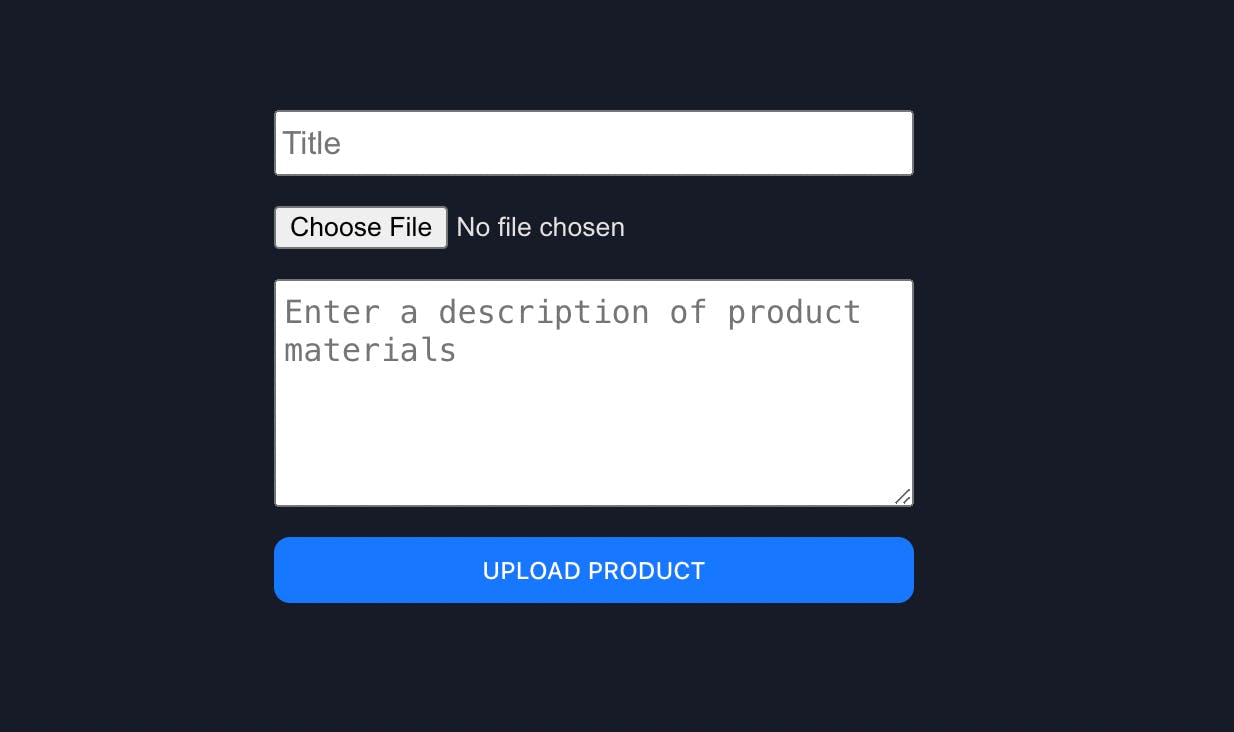

Part One: Product Admin

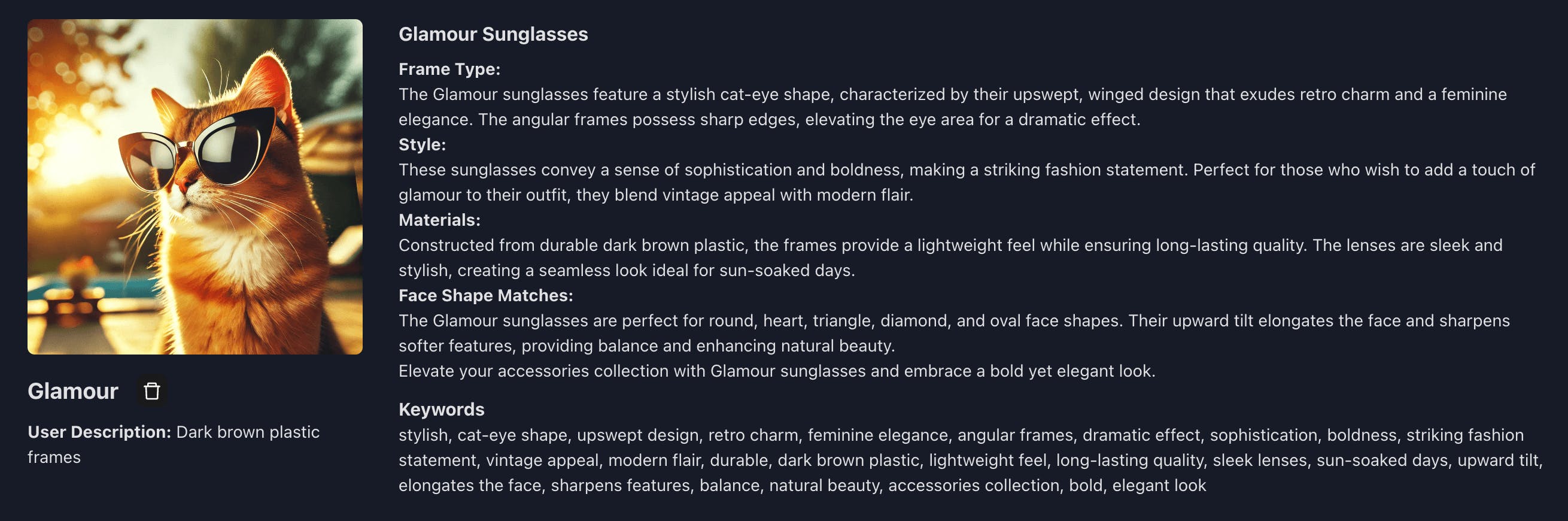

Goal: Upload product images, generate descriptions and create tags.

The product admin section serves as the backend for uploading sunglasses. The idea was to use the AI to produce comprehensive descriptions that highlight the product's features. Additionally, the model generates relevant keywords (or product tags) from the descriptions, something that would be useful if the app were expanded to use filtering or search by tags. These descriptions are stored in the database, both as text and as vector embeddings, which are later used to perform similarity searches when customers make queries.

I found GPT-4’s vision on its own to be mostly accurate, but it struggled with material differentiation (e.g., plastic vs. wire frames). To address this, I added a field where users could input extra details, which the model easily integrates. The other challenge I encountered was that the descriptions could be a bit too general or inventive, and I wanted to follow some established categorization of lens types. To rein this in, I incorporated a sunglasses guide into the prompt, outlining various frame shapes and the face types they best complement.

I also tested the CLIP model, but in this particular use case, GPT-4 vision provided comparable results, and it was much easier to integrate since I could pass images directly to the model without needing to manage temporary downloads or file handling.
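As a rough illustration of what passing an image directly can look like, here is a minimal sketch that builds a message payload in the format the OpenAI Chat Completions API accepts for image input: a text part plus an `image_url` part, here carrying a base64 data URL (a public image URL can go in the same `url` field). The helper name `build_vision_messages` and the sample bytes are hypothetical placeholders rather than the app's actual code, and the API call is left as a comment because it needs a key.

```python
import base64


def build_vision_messages(prompt: str, image_bytes: bytes, mime: str = "image/jpeg") -> list:
    """Build a chat message list with a text prompt and one inline image.

    Hypothetical helper for illustration; not taken from the app's code.
    """
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


# Placeholder bytes stand in for a real uploaded product photo
messages = build_vision_messages("Describe these sunglasses.", b"fake-image-bytes")

# With the official `openai` package and an API key, the payload could be sent like this:
# from openai import OpenAI
# client = OpenAI()
# response = client.chat.completions.create(model="gpt-4o", messages=messages)
# print(response.choices[0].message.content)
```

Keeping the payload construction separate from the network call makes it easy to check the request shape without spending tokens.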

In the prompt I included formatting notes so that the output could be parsed by a React markdown formatter in the frontend, for a neater-looking presentation. In the keywords prompt, I needed to ask it to exclude generic words like "sunglasses" and the face shapes; otherwise those always appeared.

sunglass_styles_prompt = f"""

Create a product description for a pair of sunglasses based on the image.

The title of the product is {title}. The materials used in the construction of the sunglasses are in the {user_description}.

Explain the style, shape, and details of the sunglasses based on what you see in the image, using this guide to sunglasses to create a description {sunglass_styles}

Include the following in the final product description, but be concise and try to use only what you see in the image, the styles guide, and user description. Make sure to include the shape.

Frame types: Describe the specific shape and style of the frames.

Style: Comment on the overall aesthetic.

Materials: Describe the materials, lens types and colors.

Face shape matches: What face shapes are best and why. Include all the best for recommendations in the sunglass styles guide.

Use an ### for the first title and ** for the bolded subtitles.

"""

Some example results:

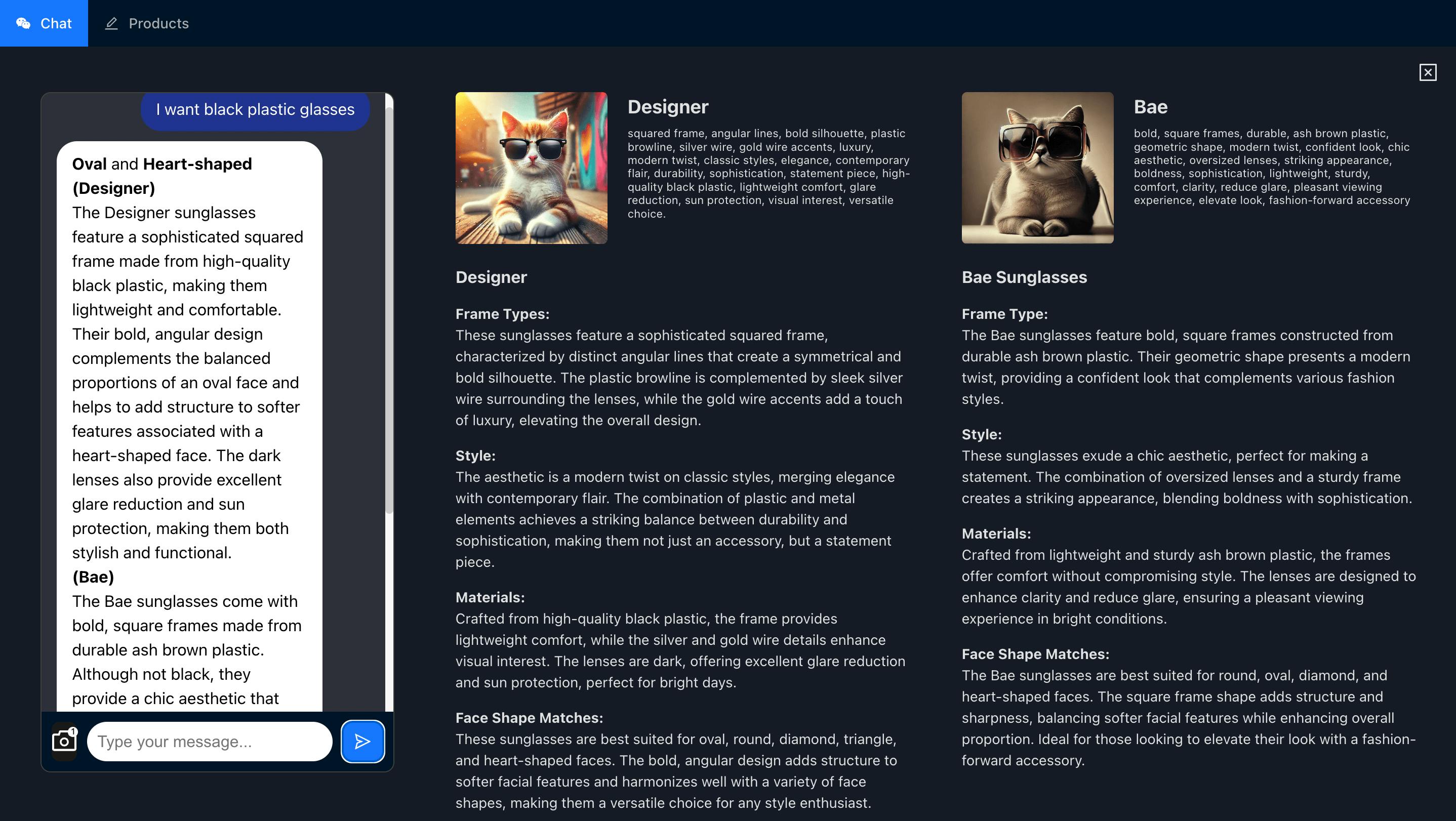

Part Two: Shopping Assistant

Goal: Create a chatbot search for sunglasses that enhances results by factoring in the user’s face shape.

In the shopping assistant part of the app, I wanted to do more than just a simple product search. The idea was to create a personalized experience by recommending sunglasses that suited the user’s face shape. Since many people are unsure of their face shape, I decided to integrate a webcam feature that captures an image of their face, allowing GPT-4 vision to analyze the shape. For users who prefer not to use the webcam, or who are simply curious to read more about the different shapes, I added a face shape selector where they can manually choose their face type.

The face shape data and the user’s search query are then sent to the API. Initially, I experimented with combining the face shape and query into one cosine similarity search but found that this favored face shape over the actual product features the user was requesting (e.g., plastic frames, mirrored lenses). To address this, I implemented a two-step process: first, the backend performs a cosine similarity search based on the user’s query, returning four possible products, and then the model chooses two by identifying which are the best matches for the user’s face shape.
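The first step of that process can be sketched in plain Python. This is only an illustration of the ranking logic, however the app actually runs the search: the function names, the toy three-dimensional embeddings, and the product titles are all made up for the example, and real embeddings would come from an embedding model and have many more dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k_products(query_embedding, products, k=4):
    """Return the k products whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query_embedding, p["embedding"]), p) for p in products]
    # Sort on the score only, highest first
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [product for _, product in scored[:k]]


# Toy catalog with hypothetical products and embeddings
products = [
    {"id": 1, "title": "Aviator", "embedding": [1.0, 0.0, 0.0]},
    {"id": 2, "title": "Wayfarer", "embedding": [0.0, 1.0, 0.0]},
    {"id": 3, "title": "Round", "embedding": [0.7, 0.7, 0.0]},
    {"id": 4, "title": "Cat-eye", "embedding": [0.0, 0.0, 1.0]},
    {"id": 5, "title": "Sport", "embedding": [0.9, 0.0, 0.4]},
]

# Embed only the user's query here; the face shape is handled in step two
candidates = top_k_products([1.0, 0.1, 0.0], products, k=4)
print([p["title"] for p in candidates])
```

The four candidates are then handed to the model together with the face shape guidance, so the face shape only influences the final pick and not the retrieval.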

prompt_text = """

You are a helpful shopping assistant trying to match customers with the right product.

You will be given a {question} from a customer, and a list of {recommended_products} of the products available for sale that roughly match the customer's question.

Among the products given, find two that best match their question but also their face shapes based on this recommendation about what style of glasses look best for their face shapes {shape_recomendation}.

If there are none matching their question, apologize and make an alternative recommendation that is the closest match to their request.

Respond with the one or two face shapes identified in the shape recommendation using <strong> tags, no parentheses. If there are two face shapes, name the first as the primary face shape and the second as the secondary one.

Then give the two best product matches, with the title of each product, then a short summary of why the product is a good match for the customer, including what makes it appropriate for the face shape.

Wrap the title in a strong tag, like <strong>(title)</strong> and add a <br> before and after it. Use the product's id wrapped in a <span> tag with the className='hidden' and the id='product-id'

"""

To create an accurate analysis of the face shape, I found a couple of steps necessary. In the prompt I provide a guide for the AI explaining which shapes to choose from and how they are measured. I also ask the AI to return the top two face shapes, because in many cases people are a combination, so this gives a more accurate result in the end. In my case, with only one result returned, it was giving me Oval most of the time, and then occasionally Heart Shaped, when I’m really more 50/50 between the two. When I ask for the closest two results, it always identifies Oval and Heart Shaped.

In order to retrieve the product data on the frontend, I added a hidden product ID field in the AI’s chat response. This allows the backend to parse the chat output, find the correct products in the database, and return them as an array in the API response. On the frontend, this array is stored in the state along with the chat messages, so I can display the recommended products side by side with the AI’s suggestions in the chat window.
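Pulling the hidden IDs back out of the chat text could look something like the sketch below. It assumes the model follows the prompt and emits a `<span>` with `id='product-id'` around each product ID; the regular expression, the function name `extract_product_ids`, and the sample response are illustrative assumptions, not the app's actual parsing code.

```python
import re

# Matches <span ... id='product-id' ...>ID</span> with single or double quotes
PRODUCT_ID_PATTERN = re.compile(
    r"<span\b[^>]*\bid=['\"]product-id['\"][^>]*>\s*(.*?)\s*</span>",
    re.IGNORECASE | re.DOTALL,
)


def extract_product_ids(chat_response: str) -> list:
    """Return product IDs found in hidden spans, in order of appearance, without duplicates."""
    ids = []
    for match in PRODUCT_ID_PATTERN.findall(chat_response):
        if match and match not in ids:
            ids.append(match)
    return ids


# Made-up model output in the shape the prompt asks for
sample_response = (
    "<strong>Oval</strong><br><strong>Classic Aviator</strong><br>"
    "<span className='hidden' id='product-id'>12</span> A slim metal frame. "
    "<br><strong>Round Retro</strong><br>"
    '<span className="hidden" id="product-id"> 7 </span> Softens angular features.'
)

product_ids = extract_product_ids(sample_response)
print(product_ids)  # ['12', '7']
```

The IDs come back as strings, so they would need converting if the database uses integer keys. Because the model may not always follow the format exactly, it is worth treating an empty list as a normal case rather than an error.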

Conclusion

In the end, I was quite impressed with how well GPT-4 vision performed. Despite being a relatively simple implementation, the accuracy of the recommendations was good enough to be useful, and I can see potential to refine it further with a larger product catalog and more sophisticated prompts.

Of course, the catch is that the token cost of running vision makes this impractical to publish as a public app. However, the code is open and free to use: you can plug in your own API keys and experiment away.

React frontend:

Python backend: